arivis Scientific Image Analysis

- Products

- Resources

- Publications

- Case Studies

- Mitochondria Analysis with Deep Learning

- Compartmentalization of Chromatin

- Gastrulation & Migration Phenotypes

- Advanced 4D Tracking of Meiotic Spindle Dynamics

- Migration of Interneurons in Developing Mouse Brain

- Exploring Whole Organisms at Single-cell Resolution

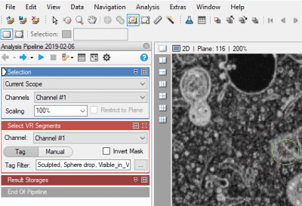

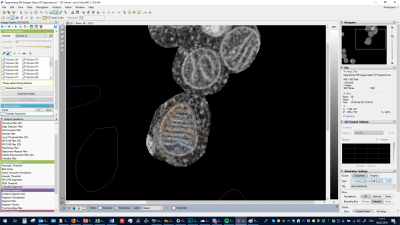

- Image Analysis Workflow for EM images

- Webinars and Workshops

- Tutorials

- AI eBook

- AI Blog

- How to's

- Service & Support

- About

- Products

- Resources

- Publications

- Case Studies

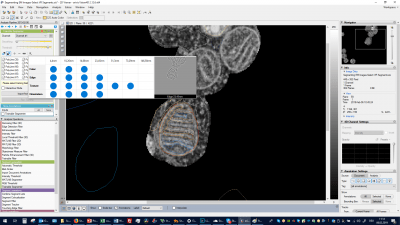

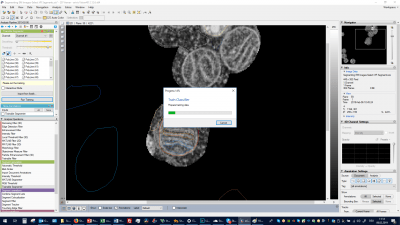

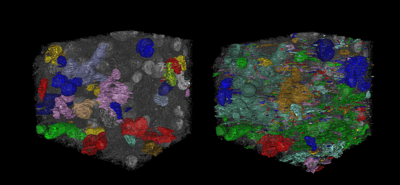

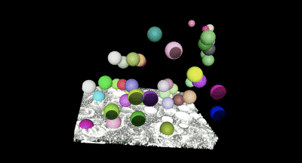

- Mitochondria Analysis with Deep Learning

- Compartmentalization of Chromatin

- Gastrulation & Migration Phenotypes

- Advanced 4D Tracking of Meiotic Spindle Dynamic

- Migration of Interneurons in Developing Mouse Brain

- Exploring Whole Organisms at Single-cell Resolution

- Image Analysis Workflow for EM images

- Webinars and Workshops

- Tutorials

- AI eBook

- AI Blog

- How to's

- Service & Support

- About
